In modern software delivery, speed is essential to stay competitive. Agile methodologies and DevOps culture have enabled organizations to deliver as often as multiple times per day. But to achieve this level of speed, teams need to rely on automation when taking a piece of software from development to production. The steps to do this are known as the “delivery pipeline.”

What, exactly, is a pipeline? In Kristian Erbou’s book, Build Better Software: How to Improve Digital Product Quality and Organizational Performance, the DevOps expert defines a pipeline as “the sequence of activities you execute deterministically, one activity at a time, in the form of a workflow configured in your Continuous Integration and Delivery platform.”

In this article, we’ll focus on the steps required for a deployment pipeline, explain why it’s important to get them right, and end with some tips on how you can speed the pipeline up.

A Deployment Pipeline Depends on a Strong Build Pipeline

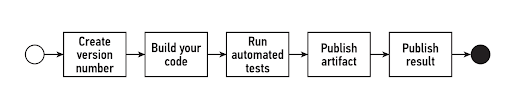

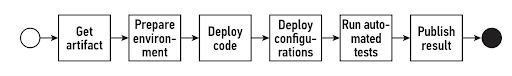

In software deployment, there are two types of pipelines: build and deployment. The two are closely related: the build pipeline comes first, and its outcome is the input for the deployment pipeline, as we can see in these figures:

Build Pipeline

Deployment Pipeline

Therefore, to achieve fast and successful deployments, you need to optimize not only your deployment pipeline but also your build pipeline. You can read our tips on doing so in this article.

Deployment Pipeline Step by Step

As we’ve seen in the figure above, a deployment pipeline goes through a series of steps. Let’s look at them in more detail.

1. Retrieve Artifact

The first step in a deployment pipeline is to retrieve the artifact you want to deploy, which enables you to cherry-pick the elements and files you need to deploy on the exact node you are working on.

While this seems simple, be wary of a recurring anti-pattern: retrieving the artifact from another environment instead of from your artifact repository. Always retrieve it directly from the repository. Otherwise, you create unnecessary dependencies that might slow down and complicate your deployment.

For example, if you always retrieve your artifact from the Test environment, you are creating a dependency: the Test environment becomes a prerequisite for a Live deployment.
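One way to guard against this anti-pattern is to make the artifact source independent of the target environment. The sketch below is only an illustration under assumptions: the repository URL, the path layout, and the names `Artifact`, `artifact_url`, and `deployment_plan` are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass

# Hypothetical repository URL; substitute your own artifact repository.
ARTIFACT_REPOSITORY = "https://artifacts.example.com/releases"


@dataclass(frozen=True)
class Artifact:
    name: str
    version: str


def artifact_url(artifact: Artifact, repository: str = ARTIFACT_REPOSITORY) -> str:
    """Build the download URL for an artifact (assumed path layout).

    The target environment is deliberately not a parameter: Test and Live
    both pull the same file from the same repository.
    """
    filename = f"{artifact.name}-{artifact.version}.zip"
    return f"{repository}/{artifact.name}/{artifact.version}/{filename}"


def deployment_plan(artifact: Artifact, environments: list[str]) -> dict[str, str]:
    """Map each environment to the source it should download the artifact from."""
    return {env: artifact_url(artifact) for env in environments}
```

Because every environment resolves to the same URL, a Live deployment never has to wait for, or trust, the state of the Test environment.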

2. Prepare your Environment

A “fail fast” strategy is essential in software development, as it allows you to get feedback and validate assumptions often, adjusting your strategy continuously. This also applies to your deployment pipeline.

Therefore, before proceeding any further, validate the state of your environment and fail the deployment altogether if the current state doesn’t satisfy expectations.

These validations include, for example, verifying that the correct version of a software package is installed on a node, or validating third-party dependencies, such as APIs.
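As a rough sketch of such a pre-deployment gate, the code below runs a list of checks and aborts before anything on the node is changed. The names `Check`, `EnvironmentNotReady`, and `validate_environment` are hypothetical, and the individual checks are left to the caller because they depend entirely on your environment.

```python
from typing import Callable, NamedTuple


class Check(NamedTuple):
    description: str
    passed: Callable[[], bool]  # returns True when the expectation holds


class EnvironmentNotReady(Exception):
    """Raised to abort the deployment before any state is changed."""


def validate_environment(checks: list[Check]) -> None:
    """Run every check and abort if any expectation is not met.

    All checks run before raising, so one failed deployment reports
    every unmet expectation instead of only the first.
    """
    failures = [check.description for check in checks if not check.passed()]
    if failures:
        raise EnvironmentNotReady("; ".join(failures))
```

A check could wrap anything that returns a boolean, for instance a comparison of an installed package version against the expected one, or a call to a third-party API's health endpoint.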

However, you and your team might not have full control over your infrastructure and may have to depend on other parts of the organization. Not only does this create dependencies, but it also causes friction and hurts the organization as a whole when responsibilities are divided between departments without agreement on expectations. Leadership should therefore address this issue, and we suggest two ways to tackle it:

- Agree on a model based upon collaboration between departments

- Grant the development team accountability for all layers in the model

Always consider giving each of your teams a dedicated environment, all the way from their development machines to a Live stage. Yes, it costs more, but it’s a good investment because it will reduce the impact of the issues we just listed.

3. Deploy State Changes

Deploying state changes means changing the state on your nodes, for example by copying files into a new location once the node has been validated to be eligible for subsequent deployment.

A state change may involve a long series of sub-activities, each dedicated to a specific task on individual nodes. Prepare and test these in advance, as much as needed, to ensure that each node is in the expected state before you start changing it. This way, you keep complexity and variance to a minimum, improving the odds of a successful deployment.

4. Deploy Configurations

After deploying state changes, you need to deploy environment-specific configurations. A typical example is API access, which normally has a username and password specific to each environment. You may have to extract these credentials and replace them after you have deployed state changes onto your nodes.

Deploying configurations differs from deploying state changes: the latter consists of executing the same operation regardless of the environment, whereas the former takes into account the environment you’re working on.
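To illustrate the split, here is a minimal sketch in which the configuration template is shared and only the values vary per environment. The keys, URLs, and user names are made-up placeholders, and `render_config` is a hypothetical helper. Real credentials should come from a secret store rather than from source code.

```python
from string import Template

# Shared template: identical for every environment (hypothetical keys).
CONFIG_TEMPLATE = Template("api_url=$api_url\napi_user=$api_user\n")

# Environment-specific values. These placeholders are illustrative only;
# real secrets belong in a secret store, not in source code.
ENVIRONMENTS = {
    "test": {"api_url": "https://api.test.example.com", "api_user": "svc-test"},
    "live": {"api_url": "https://api.example.com", "api_user": "svc-live"},
}


def render_config(environment: str) -> str:
    """Fill the shared template with one environment's values.

    substitute() raises KeyError if a placeholder has no value, so a
    missing setting stops the deployment instead of shipping a blank.
    """
    return CONFIG_TEMPLATE.substitute(ENVIRONMENTS[environment])
```

Calling `render_config("live")` after the state changes have been deployed produces the Live-specific file, while the deployed state itself stays identical across environments.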

5. Run Automated Tests

After deployment and successful change of your nodes comes testing. You need to make sure that everything went well. For that purpose, you may run some automated smoke tests that will show you whether or not the system responds as expected.

These tests don’t need to be complex, but try to simulate how an end-user interacts with the system. Consider technologies such as Selenium to help you run automated tests at this point.
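A browser-driven test with Selenium is beyond a short example, so the sketch below swaps in a much simpler technique: a plain HTTP check, using only Python's standard library, that each listed page answers with status 200. The function names and the idea of passing a list of paths are assumptions for illustration.

```python
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for a GET request to url."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def smoke_test(base_url: str, paths: list[str],
               fetch: Callable[[str], int] = http_status) -> list[str]:
    """Return the paths that did not answer with HTTP 200."""
    failed = []
    for path in paths:
        try:
            ok = fetch(base_url + path) == 200
        except OSError:  # urllib's URLError and HTTPError both derive from OSError
            ok = False
        if not ok:
            failed.append(path)
    return failed
```

An empty list means the smoke test passed. The `fetch` parameter exists so the check can be exercised without a network, and so a richer client could be dropped in later.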

6. Publish Result

After a deployment, you should inform stakeholders about the result of your state change. The result can either be successful or unsuccessful but, either way, the message should be shared.
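Putting the six steps together, a pipeline can be sketched as an ordered list of activities that runs one at a time, stops at the first failure, and publishes the outcome either way. This is a simplified illustration, not how any specific CI/CD platform works. `run_pipeline` and the `publish` callback are hypothetical names.

```python
from typing import Callable

# A step is a name plus an action that raises an exception on failure.
Step = tuple[str, Callable[[], None]]


def run_pipeline(steps: list[Step], publish: Callable[[str], None]) -> bool:
    """Run steps in order, stop at the first failure, always publish the result."""
    for name, action in steps:
        try:
            action()
        except Exception as error:
            publish(f"Deployment FAILED at '{name}': {error}")
            return False
    publish("Deployment succeeded")
    return True
```

Here `publish` could be anything that reaches your stakeholders, such as a function that posts to a chat channel or sends an email. Passing `print` is enough to try it out.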

The Importance of Doing it Right

Why would you want to speed up your deployment pipeline? Couldn’t you just let it run in the background, regardless of the time it takes? There are many reasons why you should strive towards a fast and efficient pipeline.

Having an automated pipeline is only possible when your processes are standardized: you can’t automate something that changes all the time. Variance equals complexity, and complexity equals risk. Therefore, you should aim to standardize your processes so that you can automate your pipelines.

Failing to do so will likely result in a poor outcome for everyone involved, from developers to QA, managers, directors, and the whole organization. Humans, as amazing as they are, are also flawed. They will make mistakes, even without intending to. So why rely on error-prone manual work when you can depend on machines to make sure your pipelines run as smoothly as possible?

Having a fast pipeline means you get feedback fast too. Based on it, you learn which of the changes you introduced were successful and can take action on the ones that weren’t. Besides reducing failures in the first place, automation also reduces recovery time when a failure does happen.

Let’s look at a real example of how an organization can benefit from automated pipelines, by taking the case of HNI Corporation.

Before, the organization struggled with issues that slowed down the pipeline: manual migration processes were error-prone and lacked visibility, the team didn’t have a clear picture of the execution timeline, and custom scripts required high maintenance.

HNI automated many of its processes and created pipelines that incorporated scheduling and dependency execution. Combined with integrated toolchains, an agile mindset, and the application of CI/CD, this led to impressive results: a 60x reduction in deployment time, less off-hours support, the ability to report on deployment timelines, and a shorter time from request to deployment.

How to Speed Up Your Deployment Pipeline

The steps to speed up your deployment pipeline are very similar to the ones you can use to speed up your build pipeline, which we describe in detail in this article.

- Measure Your Starting Point

Before you apply any of the following, time your current pipeline to establish a baseline. Afterward, you can time it again and see the impact of these measures. For a build pipeline, you should aim for a maximum of ten minutes. For a deployment pipeline, it’s harder to pinpoint the maximum acceptable duration, as it depends on many factors.

When measuring, note down the time that is required for the following steps: retrieving the artifact, preparing the environment, deploying state changes, deploying configurations, running automated tests, and publishing the result.
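If your CI/CD platform does not already report per-step durations, a small timer is enough to get a baseline. The following is a minimal sketch: `timed` and `slowest_first` are hypothetical helpers, and the `time.sleep` calls are stand-ins for real pipeline steps.

```python
import time
from contextlib import contextmanager

durations: dict[str, float] = {}


@contextmanager
def timed(step: str):
    """Record how long the enclosed block takes, in seconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the step raises, so failed runs are measured too.
        durations[step] = time.perf_counter() - start


def slowest_first(results: dict[str, float]) -> list[str]:
    """Return step names ordered from slowest to fastest."""
    return sorted(results, key=results.get, reverse=True)


with timed("retrieve artifact"):
    time.sleep(0.01)  # stand-in for the real step
with timed("run automated tests"):
    time.sleep(0.03)  # stand-in for the real step

for step in slowest_first(durations):
    print(f"{step}: {durations[step]:.3f}s")
```

Sorting the steps from slowest to fastest shows where optimization effort is likely to pay off first.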

- Run Parallel Jobs

The main improvement you can make to speed up your deployment pipeline is to run jobs in parallel. This can get tricky, but it reliably shortens pipeline time. Especially in complex deployments, where you need to update several nodes, making those updates in parallel will save you time. However, it won’t save you money: running jobs in parallel usually requires more machine power. This investment is very likely to pay off in the end, but it’s a matter of measuring and calculating for your specific case.
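As an illustration of updating several nodes at once, the sketch below uses a thread pool from Python's standard library. It assumes the per-node work is mostly waiting on the network or on remote commands, which is where threads help. `deploy_in_parallel` and the `deploy` callback are hypothetical names, and the right `max_workers` value depends on your infrastructure.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def deploy_in_parallel(nodes: list[str], deploy: Callable[[str], None],
                       max_workers: int = 4) -> dict[str, str]:
    """Run deploy(node) for every node concurrently and report per-node results."""
    results: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {node: pool.submit(deploy, node) for node in nodes}
        for node, future in futures.items():
            error = future.exception()  # blocks until this node's job finishes
            results[node] = "ok" if error is None else f"failed: {error}"
    return results
```

Collecting a result per node, rather than stopping at the first exception, keeps one failing node from hiding the status of the others. This is part of what makes parallel deployments trickier than sequential ones.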

- Assess Your Own Case

Other than running jobs in parallel, anything else you might do will be context-specific: a deployment in Kubernetes is different from one in AWS, for example. Therefore, your plan should be to map out your activities during deployment and figure out where you can optimize the most by spending the least effort.

Conclusion

DevOps is always a work in progress. You measure, reflect, take action, and measure again. If you don’t reach your goals, you keep the loop going. If you do reach them, you still need to keep measuring regularly.

The customer requires new features or faster delivery times. New team members come in and have better ideas. New technologies emerge that can assist you with pain points. Everything changes constantly, and it’s your job to be on top of these changes if you want to remain relevant in a highly competitive market. If it all seems like too much, we are here to help.

Søren Pedersen

Co-founder of Buildingbettersoftware and Agile Leadership Coach

Søren Pedersen is a strategic leadership consultant and international speaker. With more than fifteen years of software development experience at LEGO, Bang & Olufsen, and Systematic, Pedersen knows how to help clients meet their digital transformation goals by obtaining organizational efficiency, alignment, and quality assurance across organizational hierarchies and value chains. Using Agile methodologies, he specializes in value stream conversion, leadership coaching, and transformation project analysis and execution. He’s spoken at DevOps London, is a contributor for The DevOps Institute, and is a Certified Scrum Master and Product Owner.

